This article is the second in a three-part series exploring questions about AI. Read Part 1 here.

Large language models (LLMs) have become a potent force in artificial intelligence (AI). Trained on large amounts of text, they can generate content, translate languages, answer questions, and more — while sounding surprisingly human.

These models can write poetry from the perspective of Bugs Bunny in one minute and write a summary of legal compliance documents in the next.

But how does all of that stuff get inside an LLM?

It’s a very similar approach to a handwriting detection model, but on a much larger scale. Where a handwriting detection application could have 784 inputs, GPT-4 has approximately 1.5 trillion.

This massive scale of inputs is required because it’s a model trained on a wide range of subjects, not just handwriting. The goal of this type of Generative AI is to take text input (a prompt) and generate a response in the form of natural language (how we talk to each other).

To do that, we have to collect and classify a veritable “metric ton” of language humans have already created — and what better resource to turn to than the internet?

Training LLMs on a whole lot of human language

The exact amount of data used to train LLMs is unknown. Estimates range from a few hundred GB to tens of thousands of GB. But with all internet-delivered “human text,” we start training the model with a series of forward and backward passes, taking in the training data and comparing the results to an expected outcome using a neural network.

Ideally, each training pass yields an outcome closer to our expected outcome. And voila, the result is a model that understands the likely position of every character or group of characters (tokens) based on any given context of language.

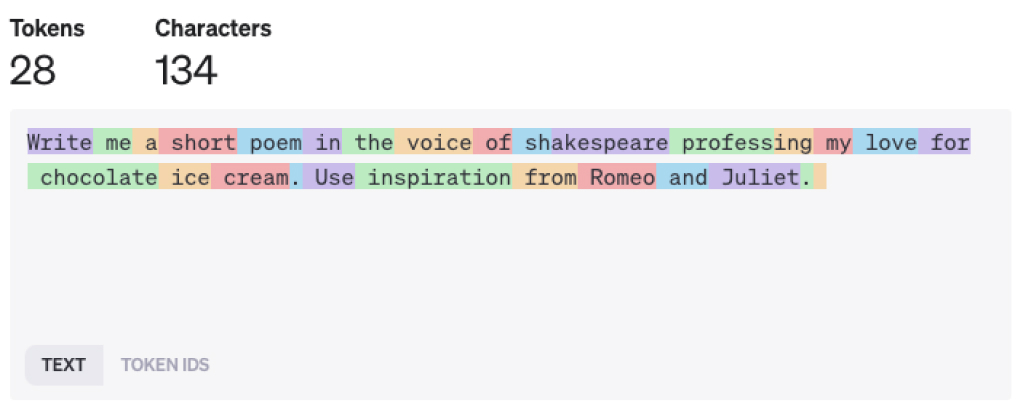

To illustrate what this means, let’s look at a sample prompt:

Write me a short poem in the voice of Shakespeare professing my love for chocolate ice cream. Use inspiration from Romeo and Juliet.

After running the prompt through OpenAI’s tokenizer it looks like this. Each color segment is a token in the image below.

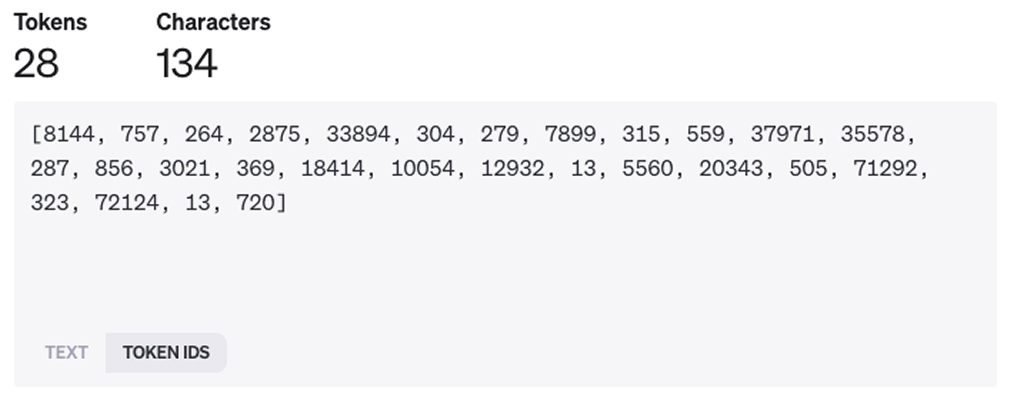

And here, every token has a number assigned to it.

The model will work to understand each consecutive token — the token that appears after 72124 (Juliet), 323 (.), and so on. Once it generates the next token, the entire block of tokens (including the newly generated one) is sent back to the model to determine what the next token is. It will iterate this way for each new token until it reaches the limit of its response.

That’s how LLMs work.

An LLM is a huge language prediction machine backed by a ton of computing power simply predicting what token to show next. It’s also an energy-hungry neural net so massive it requires the power equivalent of roughly 18,000 average U.S. households of energy over 100 days.

(By contrast, the human brain consumes roughly 99.99994% less energy than a GPT-4 training cycle within that same 100-day period. The comparison isn’t exactly 1:1, but it illustrates the scale of these systems.)

Takeaway

While understanding how LLMs work may feed your inner geek, it can also serve as a key ingredient in understanding a range of future implications, from energy consumption to using them effectively and responsibly.